
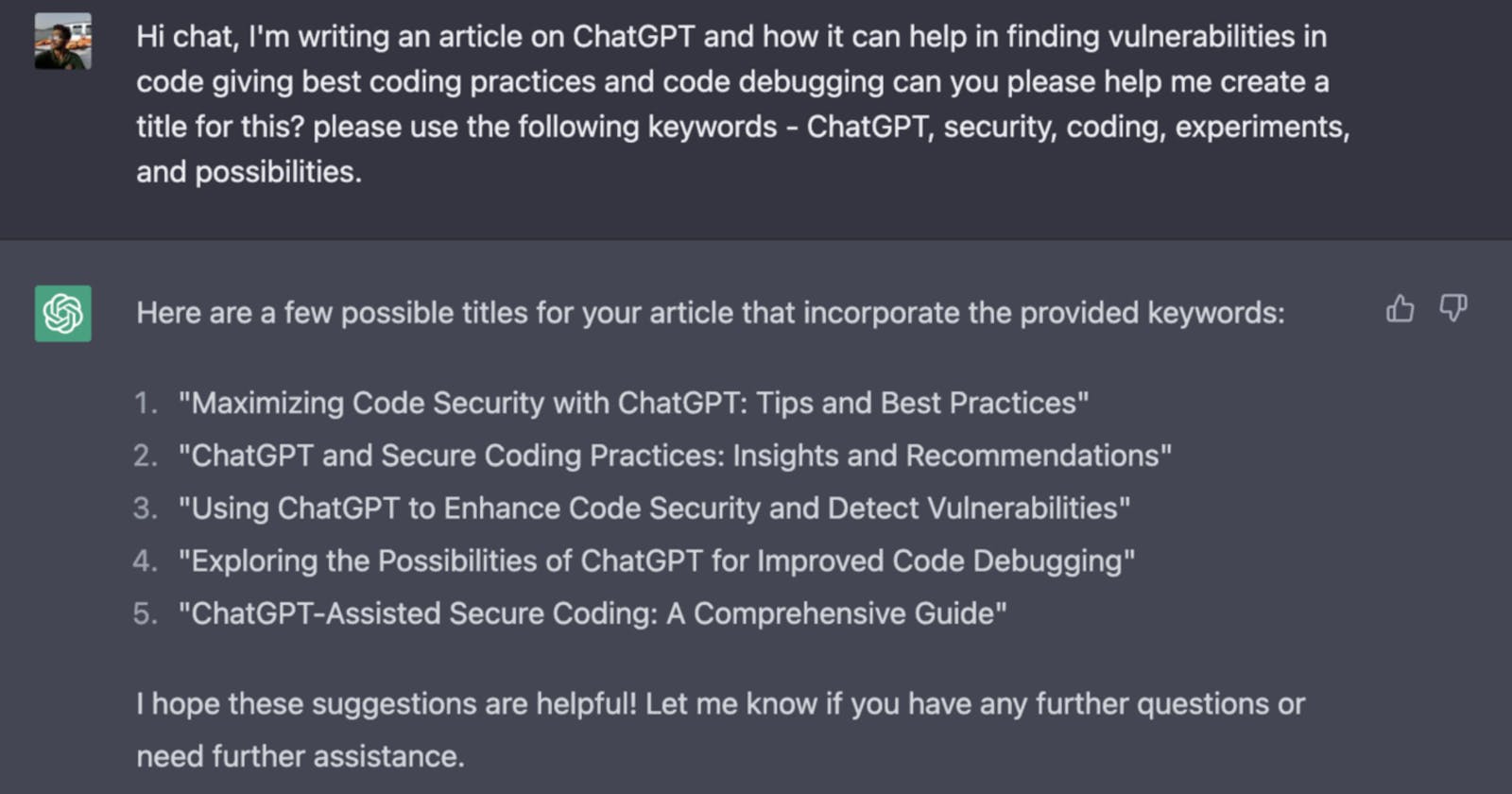

All right, hopefully the cover image sets the mood for this article. I might've been late to the ChatGPT hype train which, I'm not gonna lie, has hit everyone like a legit BULLET train. Not to mention the huge commotion on Twitter, with people showing that ChatGPT can solve half of the world's problems and claiming it is a threat hanging over developers' necks, coming for their jobs anytime soon (again). Not the first time devs have been threatened, right? (**Whispers** GitHub Copilot.)

So what is it actually, and what can it do?

Just for the audience unfamiliar with the topic: ChatGPT is a large language model trained by OpenAI and designed specifically for chatbot applications. But it's kinda more than just a chatbot; it is trained to generate human-like responses to prompts given in natural language. It has been fine-tuned on a large dataset of human-to-human conversation data to improve its performance in the context of chatbot applications.

Actually, it is a variant of the GPT-3 (short for "Generative Pre-trained Transformer 3") language model, also by OpenAI; OpenAI describes it as fine-tuned from a model in its GPT-3.5 series. The main difference is that ChatGPT is a specialized version optimized for chatbot applications, while GPT-3 is a more general-purpose language model that can be used for a wide range of natural language processing tasks.

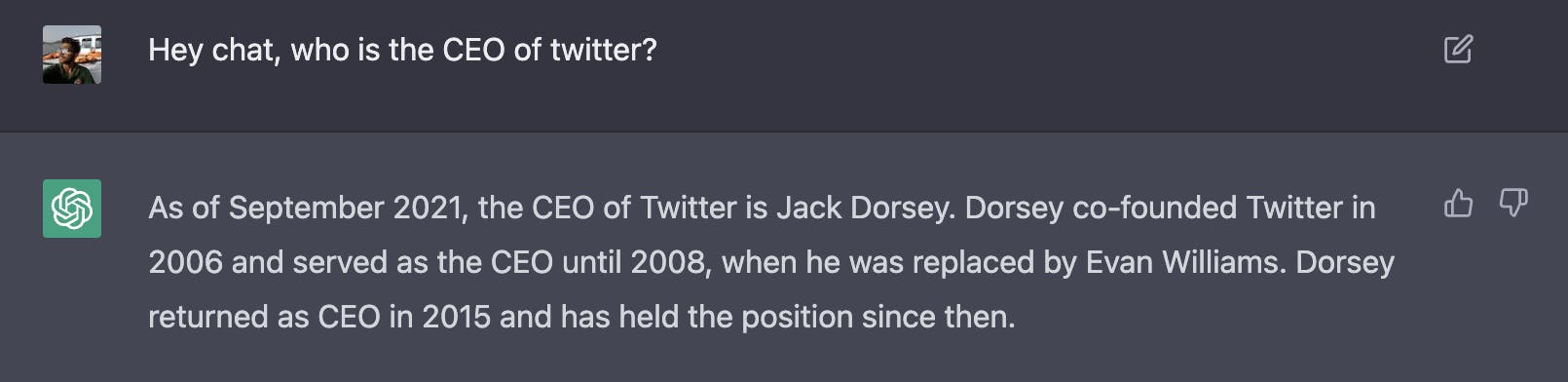

Another important thing to note is that the model's training data only runs up to 2021. Ask it for any factual information from before that cutoff and it gives you the answer -

And for any intel that goes beyond that threshold, it blanks out -

So to sum up the intro: its purpose is to understand what users ask and respond with a simplified answer.

It can write stories, blog posts, poems in the style of a particular poet or in the tone of a hip-hop artist, and many more things based on the context and intel you provide. Basically, you can have conversations with it and it will give you answers in text form, like Google, and in many cases in a much simpler manner than that.

Okay! Honestly, just a few days ago I wasn’t even aware of this model, having been a bit out of touch with the AI domain since my time at university. So when I saw the internet losing its mind over what looked like just another chatbot, I figured it was one of those internet fads that show up only to fade away.

Well... that was until I went through the content of a couple of security folks on YouTube (LiveOverflow and FuzzingLabs).

In short, the guys were showcasing how ChatGPT can work out solutions to coding-related tasks. This included finding vulnerabilities in smart contracts, simplifying complex regexes, solving CTF problems, finding the right payload to bypass XSS filters, and finding out what Elon is going to buy and kill next (jk! It’s not going to sell out its godfather, right? 😂).

All right! From the videos above we get the idea that ChatGPT could potentially learn to identify vulnerabilities, right? But the catch is that it only works well if it is given enough context and data to work with.

Just to give you an idea, below are the screenshots from Twitter where a user was trying to find an exact payload to exploit a buffer overflow in the code and get the flag.

(Quick refresher on buffer overflows: when a program copies more data into a buffer than the buffer can hold, the extra bytes spill over into adjacent memory. That can crash the program or, in the worst case, let an attacker execute arbitrary code.)
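To make that refresher a bit more concrete, here is a minimal sketch in Python that only simulates the idea. The 16-byte buffer, the 8-byte saved return address, and the address `0x401000` are all made-up values for illustration; real stack layouts depend on the compiler and architecture, and no real memory is touched here.

```python
# Toy model of a stack frame: a fixed-size buffer immediately followed by
# a saved return address. All sizes and addresses are hypothetical.
BUF_SIZE = 16
RET_SIZE = 8


def make_frame(return_address: int) -> bytearray:
    """Build a fake frame: zeroed buffer + little-endian return address."""
    frame = bytearray(BUF_SIZE + RET_SIZE)
    frame[BUF_SIZE:] = return_address.to_bytes(RET_SIZE, "little")
    return frame


def unsafe_copy(frame: bytearray, data: bytes) -> None:
    """Copy input with no check against BUF_SIZE (like an unchecked strcpy)."""
    n = min(len(data), len(frame))  # the toy frame itself is finite
    frame[:n] = data[:n]


def safe_copy(frame: bytearray, data: bytes) -> None:
    """Same copy, but reject anything that does not fit in the buffer."""
    if len(data) > BUF_SIZE:
        raise ValueError("input longer than buffer")
    frame[:len(data)] = data


def saved_return(frame: bytearray) -> int:
    """Read back the saved return address from the fake frame."""
    return int.from_bytes(frame[BUF_SIZE:], "little")


frame = make_frame(0x401000)
unsafe_copy(frame, b"A" * BUF_SIZE)               # fits exactly: nothing clobbered
intact = saved_return(frame)
unsafe_copy(frame, b"A" * (BUF_SIZE + RET_SIZE))  # too long: return address overwritten
clobbered = saved_return(frame)
print(hex(intact), hex(clobbered))
```

Once the input is longer than the buffer, the "return address" is whatever the attacker typed, which is exactly what the length check in `safe_copy` prevents.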

The user went ahead and loaded the source code and gave it something to work with: in this case, the address of the function where the flag is stored and the architecture of the target system -

Surprisingly enough, the model was able to make sense of the source code provided to it. Furthermore, it put the pieces together with the extra information it was given, namely the address of the function that the user wants to call.

Great! But in the following conversation we see something even more mind-blowing. The user went a step further and provided the structure of the stack, with the intent of finding the exact payload to exploit the code -
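For readers who have not done this kind of CTF challenge, the three pieces of information the user supplied (target function address, architecture, stack layout) are typically all you need to describe such a payload: filler bytes up to the saved return address, then the target address in the architecture's byte order. The sketch below assumes a little-endian target; the offset and addresses are hypothetical placeholders, not the values from the screenshots.

```python
import struct


def build_payload(offset: int, target_addr: int, bits: int = 32) -> bytes:
    """Return filler bytes followed by the packed target address.

    offset      -- bytes between the start of the buffer and the saved
                   return address (comes from the stack layout)
    target_addr -- address of the function we want to jump to
    bits        -- 32 or 64, from the target architecture (little-endian assumed)
    """
    if bits not in (32, 64):
        raise ValueError("bits must be 32 or 64")
    fmt = "<I" if bits == 32 else "<Q"  # '<' means little-endian
    return b"A" * offset + struct.pack(fmt, target_addr)


# Hypothetical values purely for illustration.
payload = build_payload(offset=4, target_addr=0x080491B6, bits=32)
print(payload)
```

Getting the offset or the byte order wrong just produces a crash instead of a jump, which is why the stack layout the user provided mattered so much.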

I was bamboozled. You give it the logic plus some more specific information to work with, and boom! You get yourself a human-like talking debugger that you can ask what is going on in the code and what is wrong with it. The first thought that crossed my mind was that this opens up a whole new path to finding 0-days and, ultimately, CVEs.

The Experiment

Naturally, I had to give it a try, so I did what every sane person would do: put aside all my critical tasks and decided to take a dive into this rabbit hole.

So for our “educational purposes”, I picked an old version of Memcached (1.4.15, released on 5th September 2012) which is in fact vulnerable to a buffer overflow in the process_bin_append_prepend function.

Right off the bat, I started by asking basic questions like -

Seems like it had some previous insight on this, so I proceeded to ask it more open-ended questions to set the direction of the conversation -

Sure, it was hesitant in the beginning, ranting about ethics and unlawfulness, but we are doing this for educational purposes r..right?

As we learned above, it needs something to work with, so I just started blasting it with the source code -

Some specific files -

Some more specific files -

And hold your breaths folks -

It actually did it!? I was in the same state of awe as you are right now. I had no clue that it was going to be this easy. HOW DID it find this from just code snippets?

So what is happening here? We won’t go deeper into exploit development like the good sire/madam we saw in the first example, since that is out of the scope of this post. Still, it seems evident that this thing works as advertised, provided it is given sufficient information to work with.

Ultimately, this tool can be a great asset whether you are trying to find a flaw in a code snippet or doing some research work. And to be honest with you readers, around 70 percent of the content in this article came from me asking this tool a large number of questions. So, let’s be real guys, things are getting done with it. A lot of my developer friends are using it to generate code boilerplate and README.md files, which are in fact trivial and repetitive tasks. So it’s good to give it a go, right? I know some of you are going like this -

“That’s it man, I am using this in my projects to code with zero bugs at work from now onwar-” Yeah, no.

Don’t do that. Whatever you type into this chat is sent to OpenAI's servers, so there is a likelihood that it gets stored and possibly reviewed. Yes, it is a pre-trained model, and yes, there are moderators keeping this thing functioning, but you certainly wouldn’t want to be the person who risked exposing your company's source code, right? Moreover, I’d strongly suggest you look at the secure coding policies of your workplace before hopping on this thing with any internal code.

Now I guess we can agree on the final takeaways -

ChatGPT may not be the perfect tool for source code analysis and vulnerability detection. However, if it is given enough information to work with, it is capable of understanding code and explaining solutions in human-like language. So it could potentially be used to help with these tasks in some capacity.

This could be useful for developers who need to quickly understand the purpose and function of a piece of code without having to read through it line by line. This could potentially solve the problem with the forbidden legacy code with no docs or comments 💀.

And how about the idea that it could be a good debugging companion?

For bounty hunters, it could be another tool on their belt for finding vulnerabilities.

In terms of ethics and policies, ChatGPT is just a tool, and like any tool, it can be used for good or bad purposes. It's up to the users of the tool to ensure that it's being used in a responsible and ethical manner. If ChatGPT is being used for source code analysis and vulnerability detection, it's important to make sure that the model is being trained on high-quality, accurate data, and that the results of its analysis are being used responsibly.

Hope you found this little article useful.

Cool links you might want to check out:

ChatGPT: https://chat.openai.com/chat

Official website: https://openai.com/blog/chatgpt/

OpenAI: https://openai.com/

OpenAI Discord: https://discord.gg/openai

Connect with me on -

Twitter : https://twitter.com/Vivek98Vivek

LinkedIn: https://www.linkedin.com/in/vivek-choudhary-349601112/

Thanks for reading!

#chatGPT #security #applicationSecurity #hacking